I've been writing code for over a decade, and I've never seen the development landscape change as rapidly as it has in the past two years. We've gone from AI being a curiosity to AI assistants being essential tools in most developers' workflows.

But something strange is happening. Instead of making development more coherent, AI tools are creating a new kind of fragmentation—and most developers don't even realize it's happening.

The Promise vs. Reality

The promise was simple: AI would make coding faster, more creative, and more accessible. And in many ways, that's exactly what happened.

I can now prototype ideas at lightning speed. I can explore unfamiliar frameworks with an AI pair programmer guiding me. I can refactor complex codebases with confidence, knowing my AI assistant will catch edge cases I might miss.

This is what we call "vibe coding"—development that feels more like a conversation with an intelligent partner than traditional programming. It's creative, iterative, and surprisingly effective.

But here's the reality check: I'm now using four different AI coding assistants regularly, and they all give me different advice for the same problems.

The Fragmentation Problem

Let me paint you a picture of my current development setup:

Monday morning: I'm in Claude Code, working on a React component. Claude suggests using hooks in a specific pattern, recommends TypeScript interfaces, and understands my project uses Supabase.

Tuesday afternoon: I switch to Cursor for some refactoring. Cursor has no idea about my previous conversations with Claude. It suggests different patterns, recommends different libraries, and treats my TypeScript project like vanilla JavaScript.

Wednesday: I'm trying Windsurf for a complex debugging session. Again, zero context about my project. It gives generic advice that ignores my specific tech stack entirely.

Thursday: GitHub Copilot is suggesting completions based on... I'm not even sure what. Sometimes it's spot-on, sometimes it's completely irrelevant.

Each tool is powerful in isolation, but together they create a chaotic experience where I spend more time explaining my project context than actually coding.

Why This Happens (Technical Context)

The fragmentation isn't accidental—it's structural. Each AI coding platform was built with different assumptions about how developers work:

Claude Code assumes you'll have long-form conversations with memory persistence across sessions. It's optimized for exploration and learning.

Cursor focuses on real-time code completion and refactoring. It's built for developers who want AI suggestions as they type.

Windsurf emphasizes workspace awareness and complex debugging. It's designed for developers working on large, complex projects.

GitHub Copilot prioritizes code completion based on massive training data. It's optimized for common patterns and popular libraries.

These are all valid approaches, but they're fundamentally incompatible. When you switch between tools, you lose all project context, coding patterns, and accumulated knowledge about your specific setup.

The Infrastructure Gap

Here's what I realized: We're solving the wrong problem.

Most companies are building better AI models or more sophisticated interfaces. But the real problem isn't AI quality—it's AI coordination.

Your AI assistant doesn't know:

- What framework you're actually using (not just what's popular)

- Your team's specific coding conventions

- Your project's architecture and patterns

- Your preferred libraries and approaches

- Your current context and recent decisions
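To make that list concrete, here is a minimal sketch of the missing context as a typed record. The interface, its field names, and the `missingContextFields` helper are my own illustrative assumptions; they are not VDK's schema.

```typescript
// Hypothetical shape for the context an assistant is missing.
// Field names are illustrative and are not taken from VDK.
interface ProjectContext {
  framework: string;            // what the project actually uses
  conventions: string[];        // the team's coding conventions
  architecture: string;         // project architecture and patterns
  preferredLibraries: string[]; // preferred libraries and approaches
  recentDecisions: string[];    // current context and recent decisions
}

// Report which parts of the context a tool has not been told about.
function missingContextFields(known: Partial<ProjectContext>): string[] {
  const required: Array<keyof ProjectContext> = [
    "framework",
    "conventions",
    "architecture",
    "preferredLibraries",
    "recentDecisions",
  ];
  return required.filter(function (field) {
    const value = known[field];
    // Treat undefined, empty strings, and empty arrays as "unknown".
    return value === undefined || value.length === 0;
  });
}

// A tool that only inferred the framework is still missing everything else.
const freshSessionGaps = missingContextFields({ framework: "next.js" });
```

Every field left in `freshSessionGaps` is something I would otherwise have to re-explain by hand each time I switch tools.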

This isn't a model problem or a UI problem. It's an infrastructure problem.

The First Solution: VDK

After months of this fragmented experience, our team decided to build something different. Not another AI assistant, but the infrastructure layer that makes existing AI assistants work better together.

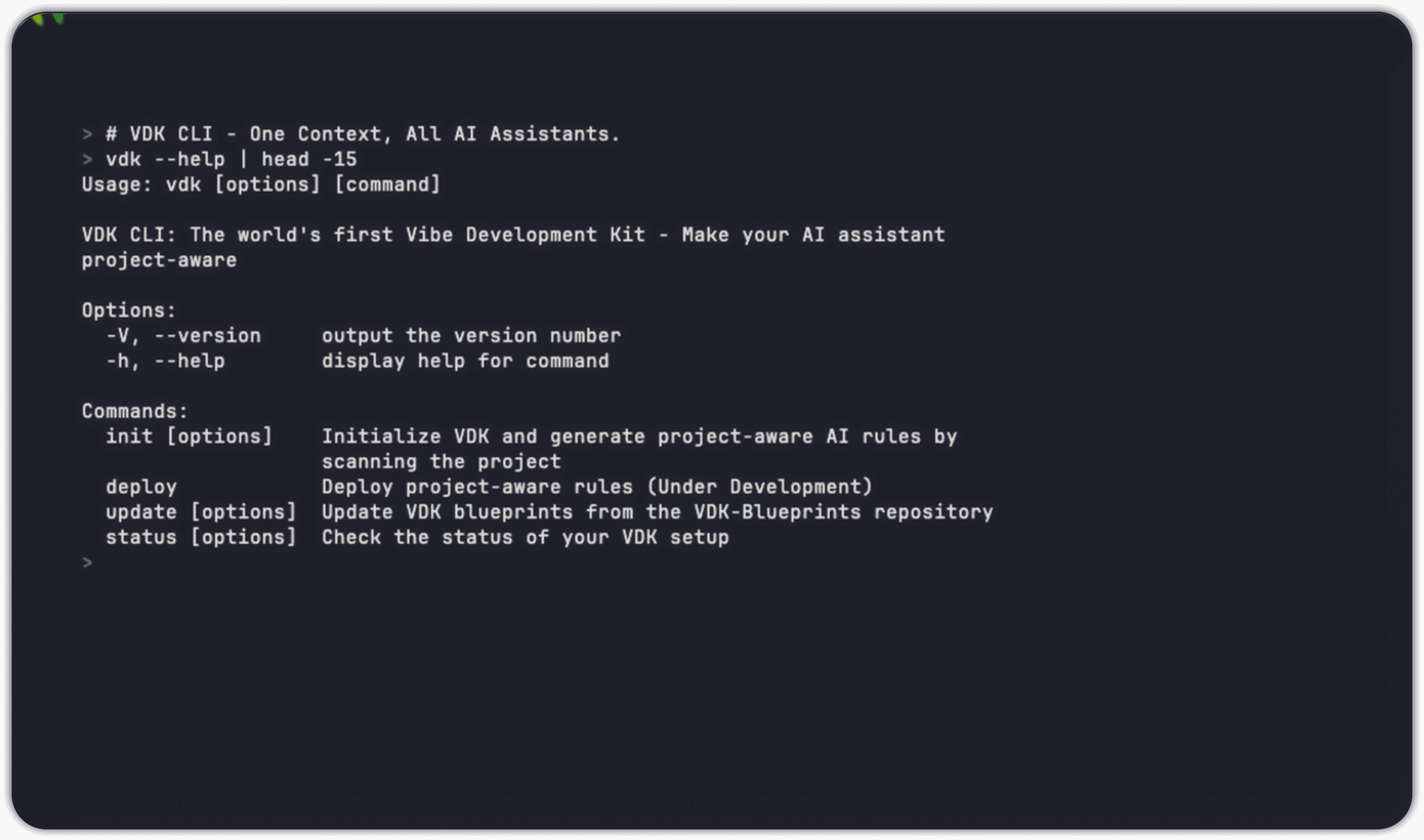

We call it VDK, the Vibe Development Kit. As far as we know, it's the first tool built specifically for the vibe coding era.

Here's the core insight: Instead of fighting fragmentation, bridge it.

VDK analyzes your actual project—your tech stack, your patterns, your conventions—and generates appropriate configuration files for every major AI coding platform.

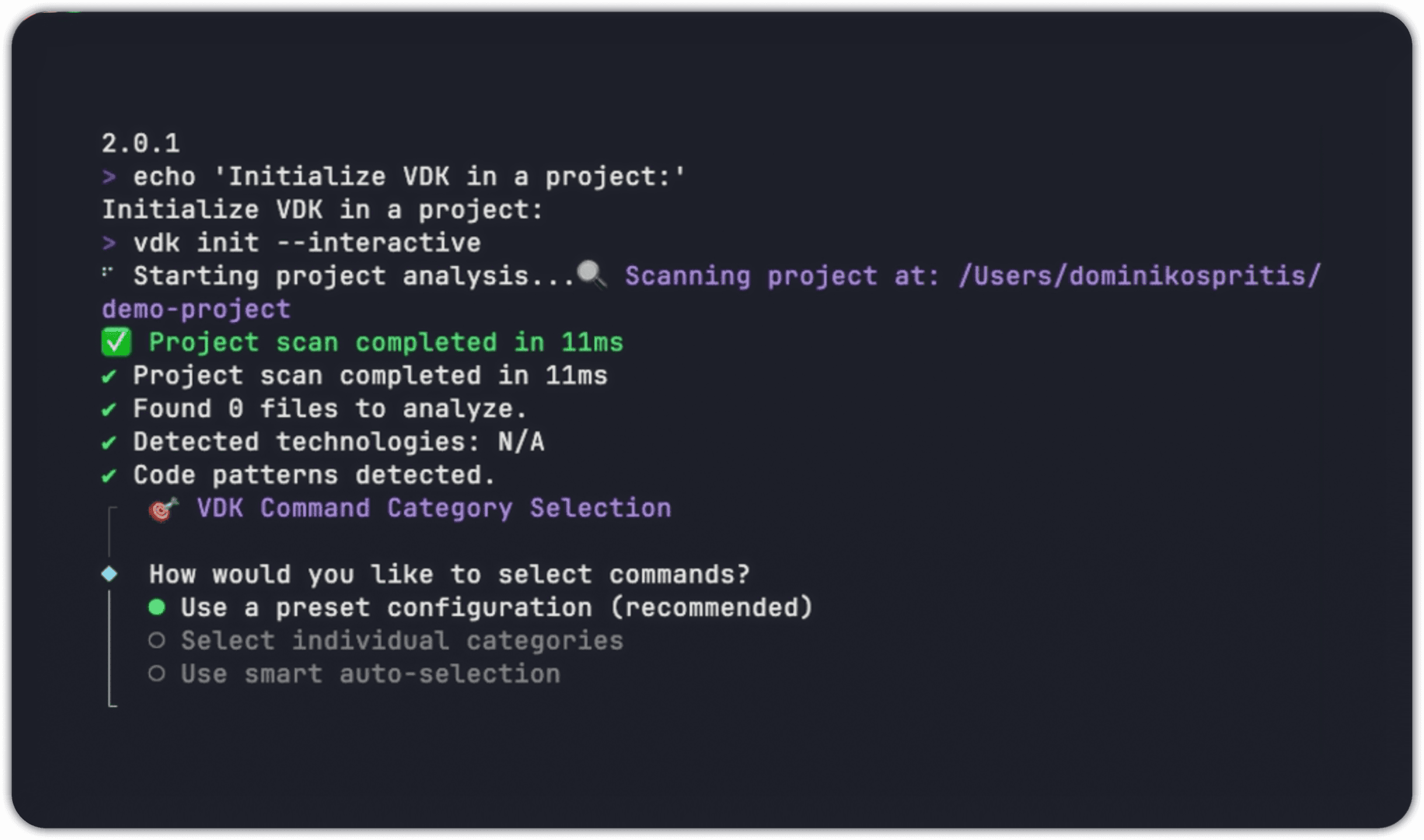

One command:

    vdk init

Result: Claude, Cursor, Windsurf, and Copilot all understand your specific project context.

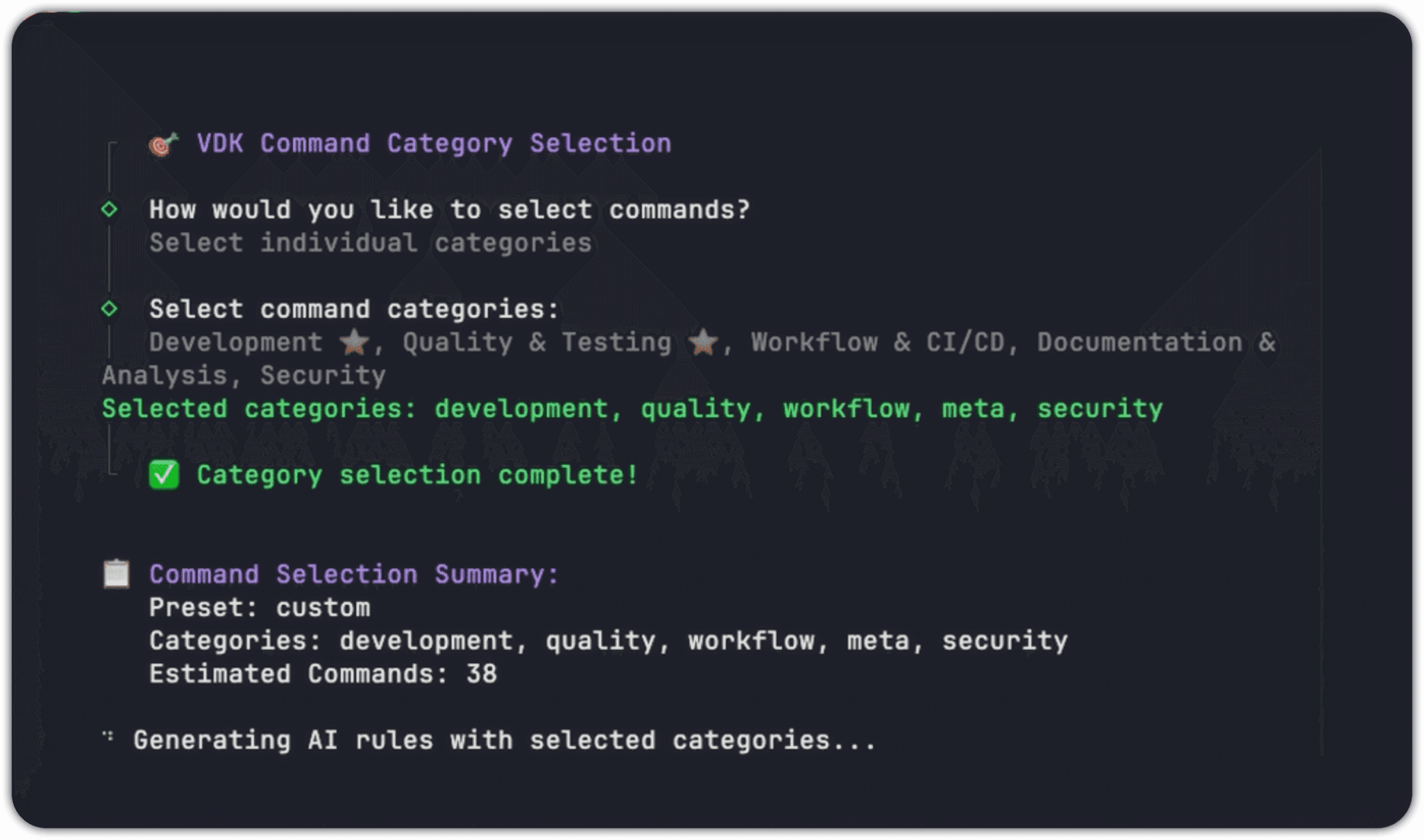

The CLI analyzes your project structure, detects technologies, and generates appropriate configurations.

Figure: Real-time project analysis showing detected technologies and generated configurations.

How It Actually Works

The technical implementation is straightforward but effective:

1. Project Analysis

VDK scans your codebase and identifies:

- Frameworks and libraries you're actually using

- Your coding patterns and conventions

- Project structure and architecture

- Build tools and deployment targets
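A rough way to picture the detection step is a pass over a project's dependency manifest. The sketch below is an assumption-heavy illustration, not VDK's actual analyzer: `PackageManifest`, `StackProfile`, and `detectStack` are hypothetical names, and a real scan would also look at source files and project structure.

```typescript
// Minimal sketch of dependency-based stack detection.
// Every name here is hypothetical; VDK's real analyzer may work differently.
interface PackageManifest {
  dependencies?: { [pkg: string]: string };
  devDependencies?: { [pkg: string]: string };
}

interface StackProfile {
  framework: string | null;
  language: "typescript" | "javascript";
  database: string | null;
  styling: string | null;
}

// Extract the leading major version from a range such as "^15.1.0".
function majorVersion(range: string): string | null {
  const parsed = parseInt(range.replace(/^[^\d]*/, ""), 10);
  return isNaN(parsed) ? null : String(parsed);
}

function detectStack(manifest: PackageManifest): StackProfile {
  // Merge runtime and dev dependencies into one lookup table.
  const deps: { [pkg: string]: string } = {};
  const sources = [manifest.dependencies || {}, manifest.devDependencies || {}];
  for (const source of sources) {
    for (const pkg of Object.keys(source)) {
      deps[pkg] = source[pkg];
    }
  }

  // Label a package as "<label>-<major>" when installed, otherwise null.
  function labelFor(pkg: string, label: string): string | null {
    if (!(pkg in deps)) return null;
    const major = majorVersion(deps[pkg]);
    return major ? label + "-" + major : label;
  }

  return {
    // Check the more specific framework first: Next.js projects also install React.
    framework: labelFor("next", "next.js") || labelFor("react", "react"),
    language: "typescript" in deps ? "typescript" : "javascript",
    database: "@supabase/supabase-js" in deps ? "supabase" : null,
    styling: labelFor("tailwindcss", "tailwind"),
  };
}

const detectedProfile = detectStack({
  dependencies: { next: "^15.1.0", react: "^19.0.0", "@supabase/supabase-js": "^2.45.0" },
  devDependencies: { typescript: "^5.8.0", tailwindcss: "^4.0.0" },
});
```

The point of the sketch is that detection keys off what is actually installed rather than what is popular.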

    // Real-world analysis results
    {
      "framework": "next.js-15",
      "language": "typescript",
      "database": "supabase",
      "styling": "tailwind-4",
      "patterns": {
        "architecture": "app-router",
        "components": "functional-tsx",
        "styling": "utility-first"
      }
    }

2. Knowledge Application

It pulls from a curated library of 109 blueprints covering modern development practices. These aren't generic templates—they're specific guidance for combinations like "Next.js 15 + TypeScript + Supabase" or "React Native + Expo + TypeScript."
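One plausible way to match blueprints to a project is a subset test: a blueprint applies when everything it requires shows up in the detected stack, and more specific blueprints win. This is a sketch under that assumption. The `Blueprint` shape, the `selectBlueprints` function, and the sample ids are hypothetical, since the real blueprint format is not covered in this post.

```typescript
// Hypothetical blueprint record; the real VDK blueprint format is not shown in this post.
interface Blueprint {
  id: string;
  requires: string[]; // technologies that must all be present in the project
}

// Keep blueprints whose requirements are fully satisfied by the detected stack,
// most specific (largest requirement set) first.
function selectBlueprints(detectedTech: string[], library: Blueprint[]): Blueprint[] {
  // filter() returns a new array, so sorting below does not reorder the library.
  const matches = library.filter(function (bp) {
    return bp.requires.every(function (tech) {
      return detectedTech.indexOf(tech) !== -1;
    });
  });
  return matches.sort(function (a, b) {
    return b.requires.length - a.requires.length;
  });
}

const sampleLibrary: Blueprint[] = [
  { id: "typescript-base", requires: ["typescript"] },
  { id: "nextjs15-typescript-supabase", requires: ["next.js-15", "typescript", "supabase"] },
  { id: "react-native-expo-typescript", requires: ["react-native", "expo", "typescript"] },
];

const chosenBlueprints = selectBlueprints(
  ["next.js-15", "typescript", "supabase", "tailwind-4"],
  sampleLibrary
);
```

With this sample library, the combination blueprint ranks ahead of the generic TypeScript one, and the React Native blueprint is skipped because Expo was not detected.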

3. Universal Configuration

VDK generates platform-specific configuration files:

.claude/CLAUDE.md:

    # Project Context: Next.js 15 + TypeScript + Supabase

    ## Tech Stack

    - Framework: Next.js 15 (App Router)
    - Language: TypeScript 5.8+
    - Database: Supabase (PostgreSQL)
    - Styling: Tailwind CSS 4

    ## Coding Patterns

    When suggesting code:

    - Use Server Components by default
    - Implement proper TypeScript types
    - Follow Supabase best practices for data fetching
    - Use Tailwind utility classes consistently

.cursor/rules/nextjs-typescript.mdc:

    ---
    patterns: ["app/**/*.tsx", "app/**/*.ts"]
    priority: 100
    ---

    # Next.js 15 + TypeScript Rules

    ## Server Component Patterns

    - Default to Server Components
    - Use 'use client' only when necessary
    - Implement proper loading states

.windsurf/rules/stack-config.xml:

    <memory>
      <context>Next.js 15 TypeScript Supabase project</context>
      <patterns>App Router, Server Components, Supabase integration</patterns>
    </memory>

.github/copilot/guidelines.json:

    {
      "framework": "nextjs-15",
      "language": "typescript",
      "database": "supabase",
      "patterns": ["app-router", "server-components"]
    }

Now when you ask any AI assistant about your project, it understands your specific context instead of giving generic advice.
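The fan-out from one project description to several platform files can be sketched as a pure function that returns path and content pairs. The file paths are the ones from the examples above. Everything else (`StackSummary`, `GeneratedFile`, `renderConfigs`, and the simplified file contents) is a hypothetical illustration, not VDK's generator.

```typescript
// Sketch of the fan-out step. The file paths come from the examples above;
// the function and type names are hypothetical.
interface StackSummary {
  title: string; // e.g. "Next.js 15 + TypeScript + Supabase"
  framework: string;
  language: string;
  database: string;
  rules: string[]; // guidance shared by every platform
}

interface GeneratedFile {
  path: string;
  content: string;
}

// Escape the characters that would break XML element content.
function escapeXml(text: string): string {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

function renderConfigs(stack: StackSummary): GeneratedFile[] {
  const bullets = stack.rules.map(function (rule) { return "- " + rule; }).join("\n");
  return [
    {
      path: ".claude/CLAUDE.md",
      content: "# Project Context: " + stack.title + "\n\n## Coding Patterns\n\n" + bullets + "\n",
    },
    {
      // The file name is hard-coded for this stack to keep the sketch short.
      path: ".cursor/rules/nextjs-typescript.mdc",
      content: "# " + stack.title + " Rules\n\n" + bullets + "\n",
    },
    {
      path: ".windsurf/rules/stack-config.xml",
      content: "<memory>\n  <context>" + escapeXml(stack.title) + "</context>\n</memory>\n",
    },
    {
      path: ".github/copilot/guidelines.json",
      content: JSON.stringify(
        { framework: stack.framework, language: stack.language, database: stack.database },
        null,
        2
      ) + "\n",
    },
  ];
}

const generatedFiles = renderConfigs({
  title: "Next.js 15 + TypeScript + Supabase",
  framework: "nextjs-15",
  language: "typescript",
  database: "supabase",
  rules: ["Use Server Components by default", "Implement proper TypeScript types"],
});
```

Returning data instead of writing files directly keeps the rendering step easy to test. A separate step can then write each `GeneratedFile` to disk.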

VDK Interface Showcase

Figure: Configuration generation and deployment across multiple AI platforms.

Figure: Status overview showing successful integration with all supported AI platforms.

Early Results

I've been using VDK on projects for the past few months, and the difference is significant:

Before: "Can you help me create a user authentication flow?"

AI response: Generic React tutorial with class components, Redux, and outdated security practices.

After: "Can you help me create a user authentication flow?"

AI response: Next.js 15 App Router implementation using Supabase Auth, TypeScript interfaces, Server Components, and current security best practices.

The AI suggestions are now relevant, current, and consistent across platforms.

Performance Characteristics

The technical implementation is optimized for real-world usage:

CLI Analysis Speed:

- Small projects (<1K files): ~200ms

- Medium projects (1-10K files): ~800ms

- Large projects (10-50K files): ~3-5s

Blueprint Processing:

- Remote fetch: ~2-3s (with caching)

- Template generation: ~100-300ms per platform

- File deployment: ~50ms per configuration

Memory Usage:

- Analysis phase: ~30-50MB

- Template processing: ~10-20MB additional

- Runtime: ~5-10MB
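To see how those figures add up for a single run, here is a back-of-the-envelope calculation. It assumes the steps run one after another, that the remote fetch is paid in full, and that each platform gets exactly one generated configuration. Those are my simplifications, and `estimateRunMs` is a hypothetical helper, not part of VDK.

```typescript
// Back-of-the-envelope estimate built only from the approximate figures listed above.
interface MsRange {
  min: number;
  max: number;
}

function estimateRunMs(analysisMs: MsRange, platformCount: number): MsRange {
  const remoteFetch: MsRange = { min: 2000, max: 3000 };       // ~2-3s
  const templatePerPlatform: MsRange = { min: 100, max: 300 }; // ~100-300ms each
  const deployPerConfig = 50;                                  // ~50ms each
  return {
    min: analysisMs.min + remoteFetch.min + platformCount * (templatePerPlatform.min + deployPerConfig),
    max: analysisMs.max + remoteFetch.max + platformCount * (templatePerPlatform.max + deployPerConfig),
  };
}

// Medium project (~800ms analysis) configured for four platforms.
const mediumProjectEstimate = estimateRunMs({ min: 800, max: 800 }, 4);
// -> { min: 3400, max: 5200 }
```

Under these assumptions, a medium project configured for four platforms lands at roughly 3.4 to 5.2 seconds, with the remote fetch as the largest single term.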

Why This Matters Beyond Development

The fragmentation problem in AI coding tools is a microcosm of a larger challenge we're facing across AI applications. As AI becomes more capable and more ubiquitous, we're going to have dozens of specialized AI tools for different tasks.

Without coordination infrastructure, we'll end up with powerful but isolated AI systems that can't work together effectively.

VDK is our attempt to solve this coordination problem in the development domain. But the pattern—analyze context, apply relevant knowledge, coordinate across platforms—applies to many other areas where AI fragmentation is emerging.

The Community Aspect

One thing that's surprised me about VDK's development is how much the community component matters. The blueprint library that powers VDK isn't just code we wrote—it's curated knowledge from experienced developers working with modern frameworks and patterns.

When someone contributes a blueprint for a new framework or updates patterns for a recent library version, every VDK user benefits. The AI assistants get better guidance, and the overall quality of AI-generated code improves.

It's a network effect: more contributors mean better blueprints, which means more accurate AI assistance, which attracts more users and contributors.

What We're Learning

Building VDK has taught us several things about the AI coding landscape:

1. Context is everything. The difference between useful and irrelevant AI suggestions often comes down to understanding project context.

2. Fragmentation is accelerating. New AI coding tools launch monthly, each with different strengths and incompatible approaches to context management.

3. Developers want coordination, not replacement. Most developers don't want to commit to a single AI tool—they want to use different tools for different tasks while maintaining consistent project understanding.

4. Infrastructure beats features. Better models and fancier interfaces matter less than basic coordination between existing tools.

The Challenges Ahead

VDK solves the immediate fragmentation problem, but there are bigger challenges on the horizon:

Platform Evolution: AI coding tools are changing rapidly. New platforms launch monthly, existing ones add new features, and compatibility requirements shift constantly.

Scale Complexity: As projects get larger and teams get bigger, keeping context coordinated gets disproportionately harder, because there are more conventions, more decisions, and more people to keep in sync.

Knowledge Curation: Maintaining high-quality blueprints for rapidly evolving frameworks and practices requires significant community effort.

Integration Depth: Current integrations are file-based, but deeper integration (like real-time context sharing) will require platform cooperation.

Where This Goes

I think we're still in the very early stages of the AI coding revolution. The current tools—Claude, Cursor, Windsurf, Copilot—are impressive, but they're also primitive compared to what's coming.

The question isn't whether AI will transform software development (it already has), but whether that transformation will be coherent or chaotic.

Will we end up with dozens of powerful but incompatible AI tools that developers have to juggle constantly? Or will we build coordination infrastructure that makes AI coding assistants work together effectively?

VDK is our bet on coordination over chaos. It's an attempt to build the infrastructure layer that makes the vibe coding era productive instead of frustrating.

Try It Yourself

If you're dealing with AI coding fragmentation in your own projects, VDK is open source and available now:

    npm install -g @vibe-dev-kit/cli
    vdk init

It takes about 60 seconds to analyze your project and configure your AI tools. No registration, no vendor lock-in, no commitments: just better AI assistance through proper project context.

The vibe coding era is here whether we're ready or not. The question is whether we'll build the infrastructure to make it work well.

Explore VDK and join the community at vdk.tools. Help us build the coordination infrastructure that makes AI coding coherent.